Birth: September 9, 1956. Haifa, Israel.

Education: Technion, B.Sc. (computer science), 1980; Princeton University, M.S.E. (computer science) 1981; M.A. (computer science),1982; Ph.D. (computer science), 1983.

Experience: Herbert H. Maass Professor, School of Mathematics, Institute for Advanced Study, Princeton, New Jersey (1999–present); Hebrew University of Jerusalem (1986–2003); Visiting positions: Princeton University (1990–1992, 1995–1996), Institute for Advanced Study (1995–1996), Mathematical Sciences Research Institute, Berkeley (1985–1986), IBM Research, San Jose (1984–1985), U.C. Berkeley (1983–1984).

Honors and Awards (selected): Rolf Nevanlinna Prize (1994); International Congress of Mathematicians Plenary Lecture (2006); Conant Prize (2008); Gödel Prize, with O. Reingold and S. Vadhan (2009); Member, American Academy of Arts and Sciences (2011); Member, National Academy of Sciences (2013); ACM Fellow (2018); Donald E. Knuth Prize (2019); Member, Norwegian Academy of Science and Letters (2021); Abel Prize, with L. Lova?sz (2021); Edsger W. Dijkstra Prize in Distributed Computing (2023); Honorary Doctorate, Technion (2023); ACM A.M. Turing Award (2023).

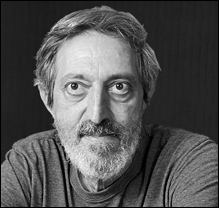

Avi Wigderson

For foundational contributions to the theory of computation, including reshaping our understanding of the role of randomness in computation and mathematics, and for his decades of intellectual leadership in theoretical computer science

Avi Wigderson was born in 1956, a child of holocaust survivors. He grew up with his two brothers near the beach in Haifa, Israel. After completing his mandatory military service, Wigderson joined the relatively young computer science department at the Technion in Haifa in 1977. Computer science in Israel was rooted in mathematics and the faculty there, particularly Shimon Even, fostered his interest in theoretical computer science. At the Technion he met his future wife Edna Rothenstein with whom he would have three children.

Wigderson earned his PhD from Princeton in 1983 under the supervision of Richard Lipton. He then held postdoctoral research positions at the University of California, Berkeley, and IBM Research in San Jose before joining the faculty at the Hebrew University in Jerusalem.

The 1970s were an exciting time for theoretical computer science. Avi Wigderson quickly made his research career by taking ideas built in that decade in entirely new directions.

Understanding Hard Problems

Suppose you are asked to find a group of 200 people on a social network where every person is friends with each other—this is known as a clique. You could attempt to check all possible groups of 200 people, but there are so many combinations that it would take an impractical amount of time. This issue is central to a famous problem in computer science: the P versus NP problem.

The P vs NP question asks whether there exists an efficient algorithm that can solve problems like finding cliques, finding the shortest route that visits cities in any order, or coloring a map with three colors so no neighboring countries have the same color. Turns out if you have a quick algorithm for one of these NP problems, then you have one for all of them. Since the P vs NP question was discovered in the 1970s by Steve Cook, Leonid Levin and Richard Karp, researchers have been unable to determine whether these problems can be solved efficiently.

Much of Avi Wigderson’s work is inspired by the P versus NP problem. Wigderson took an approach based on circuit complexity—you could show NP problems are hard by showing they cannot be computed by small circuits.

In 1990, Wigderson and Mauricio Karchmer made a remarkable connection between circuit complexity and communication complexity. In communication complexity, two people each hold different parts of an input and aim to exchange the fewest bits possible to answer a question about it. They developed a specific communication problem that reflects the depth of a circuit—the time it takes for any signal to travel through it. This approach provided new insights and limits on the power of circuits.

A few years later Wigderson used these tools for studying the perfect matching problem, where we want to determine if a group of people can be paired off so that each pair are friends. Together with Ran Raz, Wigderson demonstrated that perfect matches cannot be found by small-depth circuits that lack negations (NOT gates). Wigderson has explored both sides of this question: In 1986 with Richard Karp and Eli Upfal, he showed that matching can be solved efficiently in parallel, meaning it can indeed have small-depth circuits if NOT gates are allowed, truly exhibiting the power of negation to accelerate computation.

Universal Cryptography

The late 1970s and early 1980s marked a groundbreaking shift in cryptography, “changing this ancient art into a science,” in research honored in three separate Turing awards as Whitfield Diffie and Martin Hellman; Ronald Rivest, Adi Shamir and Len Adleman; and Shafi Goldwasser and Silvio Micali established the mathematical foundations of modern cryptography. Their work enabled secure encryption methods, leading to protocols for applications like secure online voting, playing poker over the phone, and calculating an average salary without revealing individual salaries.

These solutions remained ad hoc, requiring unique designs for each application. In the 1980s, Wigderson took this work to the next level, developing general methods that worked for a broad range of applications. With Oded Goldreich and Silvio Micali, Wigderson showed all verification problems have “zero-knowledge” proofs. For instance, if Bob is attempting a Sudoku puzzle and Alice knows the solution, Alice can convince Bob with high confidence that a solution exists without revealing any part of it. This technique applies to any NP problem, including the Traveling Salesman and Map Coloring problems. Later researchers built on these ideas to create protocols for proving identity without exposing a secret key—essential for the secure smart chips in our credit cards. The same team also designed the first general-purpose method to play games involving hidden information, such as poker, bridge, or complex auctions, fairly and securely online. This method maintains security even if a small number of cheating players try to collude.

Taming Randomness

Like his work on complexity and cryptography, Wigderson’s contributions to probabilistic computing built upon foundational work of the 1970s.

Consider trying to test whether a given number is prime, a key step for modern cryptographic protocols. You could try all the possible factors but large numbers have too many potential factors to try. In the 1970s, Robert Solovay and Volker Strassen, among others, developed an efficient randomized algorithm for testing primality, and John Gill developed a full complexity theory for probabilistic computation.

In practice computers don’t flip coins, they create fake randomness by looking at outputs of a complicated function. These pseudorandom generators were ad hoc without a strong theoretical foundation. In the late 1980s, Wigderson and his colleagues discovered a formal method for creating pseudorandom generators from computationally hard functions. Wigderson, in a series of papers co-authored with Noam Nisan, Russell Impagliazzo and others, showed how to use suitably hard functions to create strong pseudorandom generators whose outputs are computationally indistinguishable from true random bits. The outputs of these generators can replace the randomness in any probabilistic algorithm.

At the turn of the century, Avi Wigderson, by then a professor at the Institute of Advanced Study, continued to make advances in understanding randomness. Imagine trying to connect several cities with a minimal number of roads, so that by taking a random road out of each city, you’d quickly end up in a completely random city. Such networks are called “expander graphs”. Together with Omer Reingold and Salil Vadhan, Wigderson developed a new “zig-zag” construction for creating these graphs—a clever recursive method with an elegantly simple proof of correctness.

The zig-zag construction led to an algorithm that could tell, using a small amount of memory, whether two cities on a map were connected. Reingold’s algorithm used the zig-zag construction to make the cities close together if they were connected at all. In this new map, you can just look at all short trips from one city to see if it reaches the other.

Expanders also have applications for coding techniques able to automatically correct the errors introduced when bits get garbled in transmission, as often happens over wireless links. Using error-correcting codes a sender expands the message in a specific way that allows the receiver to recover the original message even if a small number of characters have been altered. Stronger bounds on expanders lead to more efficient error-correcting codes.

Expanders serve as a starting point for extracting randomness out of weak sources. Suppose you need truly random bits but you only have access to data on sunspot activity which is variable but far from uniformly random. Through several papers and collaborators, Avi Wigderson devised ways to accomplish this, either by adding a small amount of additional randomness or by using multiple independent weak sources of random bits.

Wigderson’s solutions to problems often have applications to seemingly unrelated questions. Think of a Ramsey graph like a social network where there are no moderately-sized groups who are either all friends with each other, or none of whom are friends with each other. Extractors developed by Wigderson and his successors lead to new constructions of Ramsey Graphs that increased the size of the groups that were not all connected or disconnected.

Wigderson’s Legacy

The research I have discussed here encompass just a small part of Avi Wigderson’s wide-ranging work. He has over a hundred publications in the two major theoretical computer science conferences, the ACM Symposium on the Theory of Computing and the IEEE Symposium on the Foundations of Computer Science (a total much higher than that of any other researcher). Wigderson rarely works alone, with nearly 200 distinct co-authors.

In 1999, Wigderson joined the Institute of Advanced Study in Princeton as the Herbert H. Maass Professor in the School of Mathematics. Almost immediately, IAS became a center for research in computational complexity as Wigderson hosted visiting students, postdocs and visiting faculty. Many of today’s leaders in the field honed their skills at the institute.

Wigderson served on the scientific advisory board of the Simons foundation and his advocacy led to the creation of the Simons Institute for the Theory of Computing in Berkeley, California, itself a major center for the theory community.

In his 2019 book Mathematics and Computation: A Theory Revolutionizing Technology and Science published by Princeton University Press, Wigderson argued that the P versus NP problem, computational complexity, and theoretical computer science more broadly, together constitute a philosophy that is central not only to computer science but also biology, economics, physics and the social sciences. Nevertheless he has never shied away from defining the field as a serious mathematical discipline. When someone suggested that the implementer of an algorithm get as much credit as the one who discovered it, Wigderson quipped, “Let them try to do it first.”

Author: Lance Fortnow

THE A.M. TURING AWARD

THE A.M. TURING AWARD